To participate in the EVENTA 2025 Grand Challenge, please first register by submitting the form.

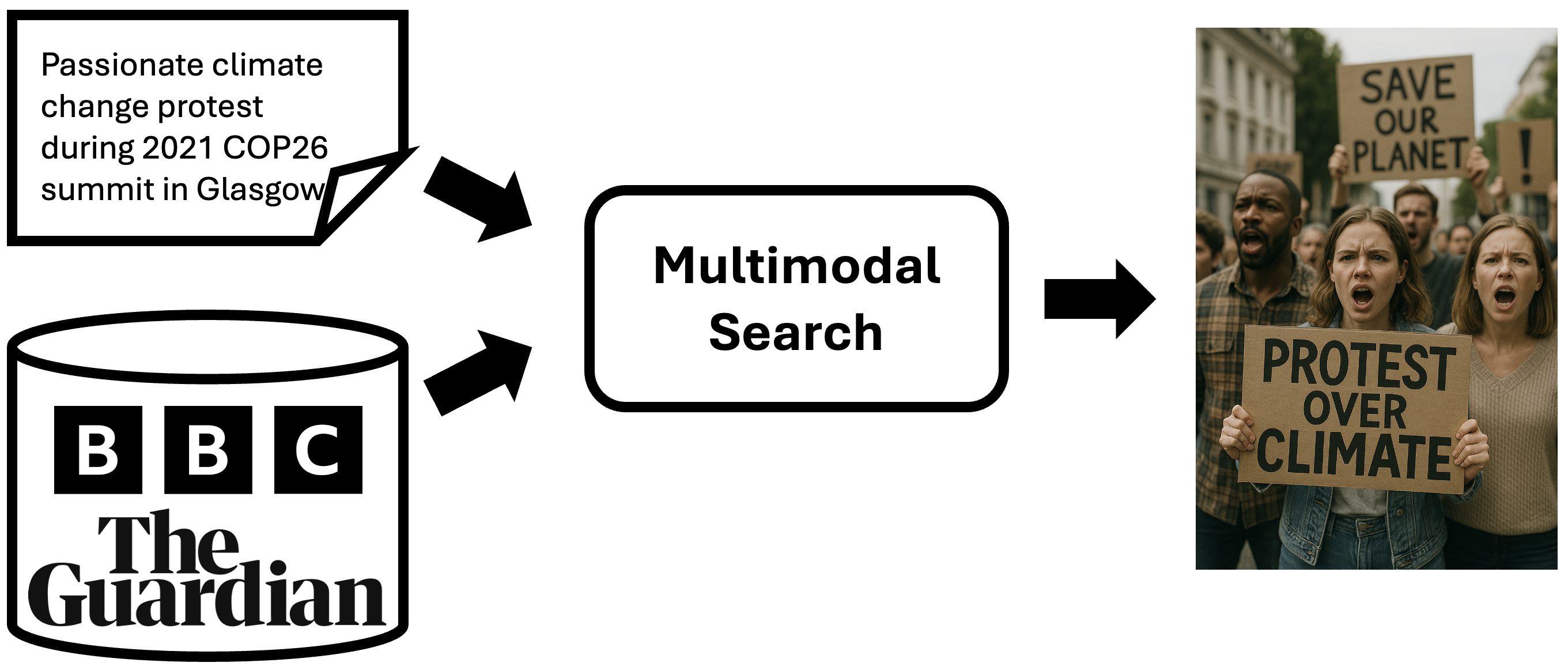

Given a realistic caption, participants are required to retrieve corresponding images from a provided database.This retrieval task is a fundamental task in computer vision and natural language processing that requires learning a joint representation space where visual and textual modalities can be meaningfully compared. Image retrieval with textual queries is widely used in search engines, medical imaging, e-commerce, and digital asset management. However, challenges remain, such as handling abstract or ambiguous queries, improving retrieval efficiency for large-scale datasets, and ensuring robustness to linguistic variations and biases in training data. This image retrieval track aims to tackle issues of realistic information from events in real life.

Participants must submit a CSV file named using the following format: submission.csv. This file must be compressed into a ZIP archive named submission.zip before uploading it to the CodaBench platform.

The CSV file should contain predictions for all queries in the provided query set. It must include 11 columns, separated by commas (,), with the following structure:

- Column 1: query_id — the unique ID of the query text

- Columns 2–11: Top-10 retrieved image_id values, ordered from most to least relevant (top-1 to top-10). If an image cannot be retrieved, use

#as a placeholder.

CSV Row Format Template:

query_id,article_id_1,article_id_2,...,article_id_10 <query_id>,<article_id_1>,<article_id_2>,...,<article_id_10> <query_id>,<article_id_1>,#,...,#

We provide a submission example:

query_id,article_id_1,article_id_2,...,article_id_10 12312,56712,56723,56734,56745,56756,56767,56778,56789,56790,56701 12334,56712,#,#,#,#,#,#,#,#,# 12345,56712,56723,56734,56745,56756,#,#,#,#,#

Submission Requirements:

- Each query_id in your submission must be unique and must come from the official provided list.

- All image_id values must correspond to valid entries in our image database.

- There is no requirement to sort the rows by query_id — this will be automatically handled during evaluation.

- Your raw

submission.csvfile must not contain any blank lines. - Submit the

submission.csvfile as a single ZIP file namedsubmission.zip— do not include any containing folder. - The

submission.csvmust include all required headers and columns, in the exact order as shown in the example file.

Submission Limits:

- For public test submissions, each team is allowed a maximum of 10 submissions per day and a total of 150 submissions.

- For private test submissions, each team is allowed a maximum of 10 submissions per day and a total of 150 submissions.

Participants are also required to submit a detailed technical paper via the CMT platform to validate their approach and results.

To fairly and comprehensively evaluate submissions in this challenge task, we define an Overall Score that integrates key evaluation criteria using a principled and balanced approach.

The Overall Score combines the following five metrics:

| Metric | Description |

|---|---|

| mAP | Mean Average Precision – measures overall retrieval precision |

| MRR | Mean Reciprocal Rank – measures how early the first correct item is retrieved |

| Recall@1 | Whether the correct item is ranked first |

| Recall@5 | Whether the correct item is within the top 5 results |

| Recall@10 | Whether the correct item is within the top 10 results |

These metrics are combined using a weighted harmonic mean, which penalizes poor-performing metrics more strongly than an arithmetic mean and encourages balanced improvements across all criteria. The formula is:

\[ \text{Overall Score} = r \cdot \left( \frac{\sum w_i}{\sum \left( \frac{w_i}{m_i + \varepsilon} \right)} \right) \]Where:

- mi is the value of the i-th retrieval metric

- wi is the weight for the i-th metric

- ε is a small constant (e.g., 10-8) to avoid division by zero

- r = (#valid queries) / (#total ground-truth queries)

Default Weights: [0.3, 0.2, 0.2, 0.15, 0.15] for [mAP, MRR, R@1, R@5, R@10]

This Overall Score will be used as the official ranking metric for evaluating all participant submissions on the retrieval leaderboard.

| Rank | Team | Overall | AP | Recall@1 | Recall@10 | recall5Score | recall10 | Code | Report |

|---|---|---|---|---|---|---|---|---|---|

| 1 | NoResources | 0.577 | 0.563 | 0.563 | 0.469 | 0.69 | 0.744 | Github | |

| 2 | 23Trinitrotoluen | 0.572 | 0.558 | 0.558 | 0.456 | 0.698 | 0.762 | Github | |

| 3 | LastSong | 0.563 | 0.549 | 0.549 | 0.449 | 0.695 | 0.738 | Github | |

| 4 | Sharingan Retrievers | 0.533 | 0.521 | 0.521 | 0.428 | 0.64 | 0.705 | Github | |

| 5 | ZJH-FDU | 0.368 | 0.361 | 0.361 | 0.27 | 0.491 | 0.525 | Github |